OBIEE 12c hangs at startup - Starting AdminServer …

Running the OBIEE 12c startup script on Windows:

`C:\app\oracle\fmw\user_projects\domains\bi\bitools\bin\start.cmd`

just hangs at:

`Starting AdminServer ...`

No CPU is being consumed, which is very odd. But then …


OBIEE

OBIEE

OBIEE

OBIEE

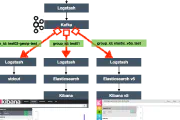

logstash

logstash

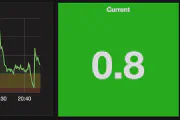

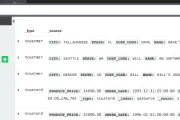

influxdb

influxdb

conferences

conferences

R

R

OBIEE

OBIEE

elasticsearch

elasticsearch

apache kafka

apache kafka

OBIEE

OBIEE

logstash

logstash

inventory

inventory

windows

windows

fedora

fedora

hp

hp

etl

etl

copy_table_stats

copy_table_stats

documentation

documentation

oracle

oracle

io

io

bi

bi