AI will fuck you up if you’re not on board

Yes, you’re right: AI slop is ruining the internet. Given half a chance, AI will delete your inbox or worse (even if you work in Safety and Alignment at Meta): Nothing humbles you like telling your …

AI

Property Graph

Stumbling into AI

Apache Flink

Apache Iceberg

Flink SQL

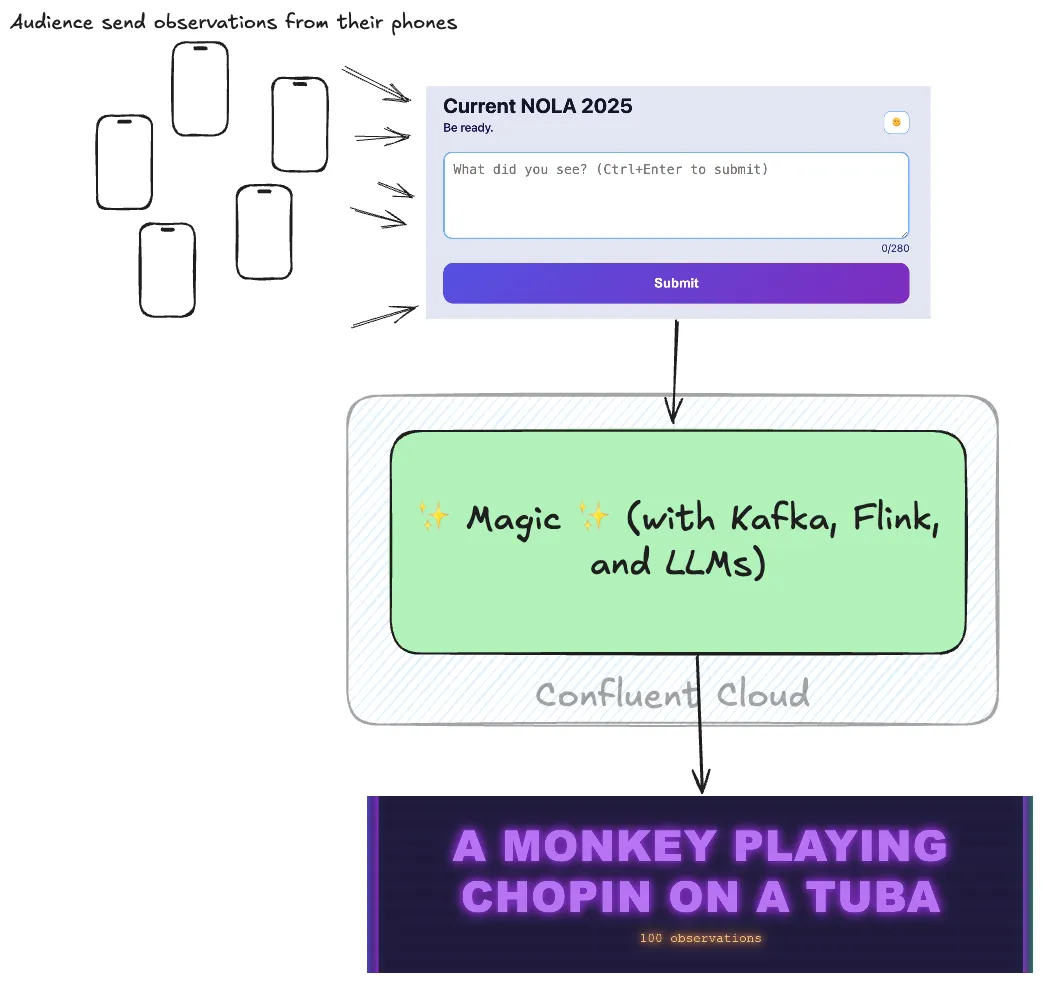

Kafka Summit

LinkedIn

Confluent Cloud

Blogging