How to win [or at least not suck] at the conference abstract submission game

Just over a year ago, I put together the crudely-titled "Quick Thoughts on Not Writing a Crap Abstract" after reviewing a few dozen conference abstracts. This time around I’ve had the honour of being on a conference programme committee and with it the pleasure of reading 250+ abstracts—from which I have some more snarky words of wisdom to impart on the matter.

Remind me…how does this conference game work?

Before we really get into it, let’s recap how this whole game works, because plenty of people are new to conference speaking.

Exploring ksqlDB window start time

Prompted by a question on StackOverflow, I had a bit of a dig into how windows behave in ksqlDB, specifically with regard to their start time. This article also shows how to create test data in ksqlDB, including data with a timestamp in the past.

For a general background to windowing in ksqlDB see the excellent docs.

The nice thing about recent releases of ksqlDB/KSQL is that you can create and populate streams directly with CREATE STREAM and INSERT INTO respectively. Much as I love kafkacat, being able to build a whole example within the ksqlDB CLI is very useful.
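To make the window start time behaviour concrete, here is a minimal Python sketch of the arithmetic. It assumes that ksqlDB windows follow the epoch-aligned scheme used by Kafka Streams, on which ksqlDB runs. The function names are hypothetical, and this illustrates the idea rather than reproducing ksqlDB's code.

```python
from datetime import datetime, timezone

def tumbling_window_start(ts_ms: int, size_ms: int) -> int:
    """Start of the tumbling window containing ts_ms.

    Assumes windows are aligned to the Unix epoch, so boundaries fall on
    exact multiples of size_ms rather than on the first record's time.
    """
    return ts_ms - (ts_ms % size_ms)

def hopping_window_starts(ts_ms: int, size_ms: int, advance_ms: int) -> list[int]:
    """Starts of every hopping window [start, start + size) that contains ts_ms.

    Window starts are assumed to be epoch-aligned multiples of advance_ms.
    """
    first = (max(0, ts_ms - size_ms + advance_ms) // advance_ms) * advance_ms
    return list(range(first, ts_ms + 1, advance_ms))

def to_ms(dt: datetime) -> int:
    """Convert an aware datetime to epoch milliseconds."""
    return int(dt.timestamp() * 1000)

if __name__ == "__main__":
    # A record timestamped in the past still lands in an epoch-aligned window
    event = to_ms(datetime(2020, 1, 1, 10, 7, 30, tzinfo=timezone.utc))
    start = tumbling_window_start(event, 5 * 60 * 1000)
    print(datetime.fromtimestamp(start / 1000, tz=timezone.utc))  # 2020-01-01 10:05:00+00:00
```

Under this assumption, the window a record falls into depends only on its own timestamp and the window size, not on when the stream started or when the record arrived.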

Streaming messages from RabbitMQ into Kafka with Kafka Connect

This was prompted by a question on StackOverflow to which I thought the answer would be straightforward, but it turned out not to be. And then I got a bit carried away and ended up with a nice example of how you can handle schema-less data coming from a system such as RabbitMQ and apply a schema to it.

This same pattern for ingesting bytes and applying a schema will work with other connectors such as MQTT.
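The general idea of taking raw bytes and applying a schema to them can be sketched in plain Python. This illustrates the concept only and does not show how Kafka Connect or ksqlDB implement it. The JSON payload and the field names in `SCHEMA` are hypothetical.

```python
import json

# Hypothetical schema: field name -> type the raw value is coerced to
SCHEMA = {"transaction": str, "amount": float, "timestamp": int}

def apply_schema(raw: bytes, schema: dict = SCHEMA) -> dict:
    """Decode a schema-less JSON message and project it onto a fixed schema.

    Fields missing from the payload become None; fields not in the schema are dropped.
    """
    payload = json.loads(raw.decode("utf-8"))
    record = {}
    for field, field_type in schema.items():
        value = payload.get(field)
        record[field] = None if value is None else field_type(value)
    return record

if __name__ == "__main__":
    message = b'{"transaction": "PAYMENT", "amount": "5.00", "timestamp": 1575974827, "extra": 1}'
    print(apply_schema(message))
    # {'transaction': 'PAYMENT', 'amount': 5.0, 'timestamp': 1575974827}
```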

Analysing network behaviour with ksqlDB and MongoDB

In this post I want to build on my previous one and show another use of the Syslog data that I’m capturing. Instead of looking for SSH attacks, I’m going to analyse the behaviour of my networking components.

You can find all the code to run this on GitHub.

Getting Syslog data into Kafka

As before, let’s create ourselves a syslog connector in ksqlDB:

```sql
CREATE SOURCE CONNECTOR SOURCE_SYSLOG_UDP_01 WITH (
  'tasks.max' = '1',
  'connector.class' = 'io.confluent.connect.syslog.SyslogSourceConnector',
  'topic' = 'syslog',
  'syslog.port' = '42514',
  'syslog.listener' = 'UDP',
  'syslog.reverse.dns.remote.ip' = 'true',
  'confluent.license' = '',
  'confluent.topic.bootstrap.servers' = 'kafka:29092',
  'confluent.topic.replication.factor' = '1'
);
```

Detecting and Analysing SSH Attacks with ksqlDB

I’ve written previously about ingesting Syslog into Kafka and using KSQL to analyse it. I want to revisit the subject because it’s nearly two years since I wrote about it, and some things have changed in that time.

ksqlDB now includes the ability to define connectors from within it, which makes setting things up loads easier.

You can find the full rig to run this on GitHub.
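Whichever Syslog connector configuration you use, it helps to be able to send it a test message. The sketch below builds an RFC 3164-style syslog line and sends it over UDP. It assumes a connector listening on UDP port 42514, as in the SOURCE_SYSLOG_UDP_01 example earlier. The host name, tag, and message text are made up for illustration.

```python
import socket
from datetime import datetime

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]

def build_syslog_message(facility: int, severity: int, hostname: str,
                         tag: str, msg: str, when: datetime) -> bytes:
    """Format an RFC 3164-style message: <PRI>Mmm dd hh:mm:ss HOST TAG: MSG."""
    pri = facility * 8 + severity
    # Day of month is space-padded to two characters, e.g. "Jan  1"
    timestamp = f"{MONTHS[when.month - 1]} {when.day:2d} {when:%H:%M:%S}"
    return f"<{pri}>{timestamp} {hostname} {tag}: {msg}".encode("utf-8")

def send_udp(message: bytes, host: str = "localhost", port: int = 42514) -> None:
    """Fire-and-forget UDP datagram; there is no delivery acknowledgement."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))

if __name__ == "__main__":
    # Facility 4 (auth) and severity 6 (informational) give PRI 38
    line = build_syslog_message(4, 6, "testhost", "sshd[1234]",
                                "Invalid user admin from 192.0.2.10 port 51234",
                                datetime.now())
    send_udp(line)
```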

Create and configure the Syslog connector

To start with, create a source connector:

Copy MongoDB collections from remote to local instance

This revisits the blog I wrote a while back, which showed how to use mongodump and mongorestore to copy a MongoDB database from one machine (a Unifi CloudKey) to another. This time, instead of a manual lift and shift, I wanted a simple way to automate updating the target with changes made on the source.

The source is, as before, Unifi’s CloudKey, which runs MongoDB to store its data about the network: devices, access points, events, and so on.

Kafka Connect - Request timed out

A short & sweet blog post to help people Googling for this error, and me next time I encounter it.

The scenario: trying to create a connector in Kafka Connect (running in distributed mode, one worker) failed with the following curl response:

```
HTTP/1.1 500 Internal Server Error
Date: Fri, 29 Nov 2019 14:33:53 GMT
Content-Type: application/json
Content-Length: 48
Server: Jetty(9.4.18.v20190429)

{"error_code":500,"message":"Request timed out"}
```

Using tcpdump With Docker

I was doing some troubleshooting between two services recently and wanted to poke around to see what was happening in the REST calls between them. Normally I’d reach for tcpdump to do this, but imagine my horror when I saw:

```
root@ksqldb-server:/# tcpdump
bash: tcpdump: command not found
```

Common mistakes made when configuring multiple Kafka Connect workers

Kafka Connect can be deployed in two modes: Standalone or Distributed. You can learn more about them in my Kafka Summit London 2019 talk.

I usually recommend Distributed for several reasons:

- It can scale
- It is fault-tolerant
- It can be run on a single-node sandbox or a multi-node production environment
- It is the same configuration method however you run it

I usually find that Standalone is appropriate when:

- You need to guarantee locality of task execution, such as picking up a log file from a folder on a specific machine
- You don’t care about scale or fault-tolerance ;-)
- You like re-learning how to configure something when you realise that you do care about scale or fault-tolerance X-D

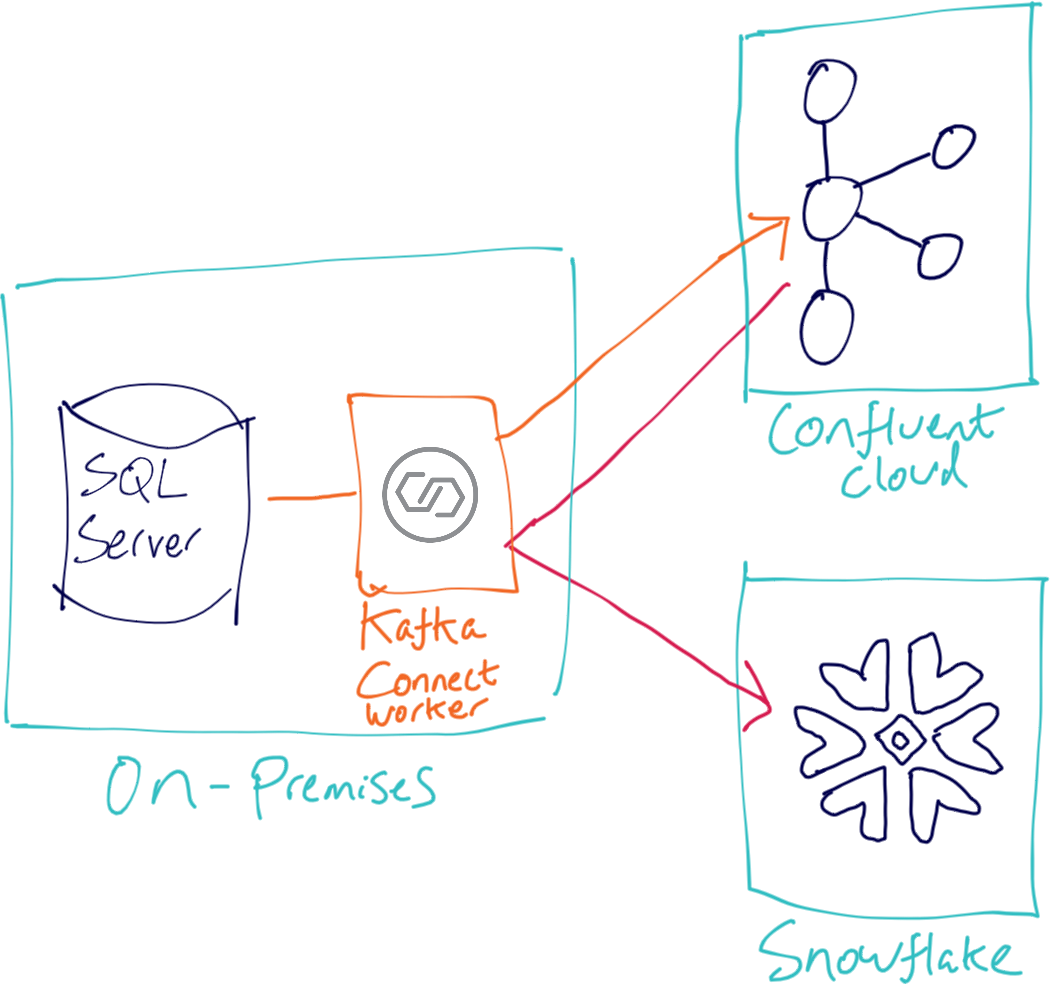
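One class of multi-worker misconfiguration can be caught by comparing the worker properties that define cluster membership. In distributed mode, workers that are meant to form a single Connect cluster must share the same `group.id` and the same config, offset, and status storage topics. The sketch below is a hypothetical helper that checks only those keys. It is not necessarily the list of mistakes the full post covers, and its properties parser is deliberately simplistic.

```python
# Settings that workers in the same distributed Connect cluster must agree on
CLUSTER_KEYS = (
    "group.id",
    "config.storage.topic",
    "offset.storage.topic",
    "status.storage.topic",
)

def parse_properties(text: str) -> dict:
    """Minimal key=value parser; ignores comments, ':' separators and line continuations."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props

def find_mismatches(workers: dict) -> dict:
    """Map each cluster-defining key to {worker: value} where workers disagree (missing = None)."""
    mismatches = {}
    for key in CLUSTER_KEYS:
        values = {name: cfg.get(key) for name, cfg in workers.items()}
        if len(set(values.values())) > 1:
            mismatches[key] = values
    return mismatches

if __name__ == "__main__":
    worker1 = parse_properties("""
group.id=connect-cluster
config.storage.topic=_connect-configs
offset.storage.topic=_connect-offsets
status.storage.topic=_connect-status
""")
    # Hypothetical second worker with a typo in its offsets topic name
    worker2 = {**worker1, "offset.storage.topic": "connect-offsets"}
    for key, values in find_mismatches({"worker1": worker1, "worker2": worker2}).items():
        print(f"{key} differs: {values}")
```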

Streaming data from SQL Server to Kafka to Snowflake ❄️ with Kafka Connect

Snowflake is the data warehouse built for the cloud, so let’s get all ☁️ cloudy and stream some data from Kafka running in Confluent Cloud to Snowflake!

What I’m showing also works just as well for an on-premises Kafka cluster. I’m using SQL Server as an example data source, with Debezium to capture and stream any changes from it into Kafka.
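For context on what Debezium writes to Kafka: each change event value carries an envelope with `before` and `after` images of the row plus an `op` code (`c` create, `u` update, `d` delete, `r` snapshot read). Below is a minimal Python sketch that flattens that envelope to just the current row state. It mimics the idea behind Debezium's ExtractNewRecordState single message transform but is not that transform, and the sample row is invented.

```python
from typing import Optional

def unwrap_change_event(event: dict) -> Optional[dict]:
    """Return the row state after the change, or None for a delete."""
    op = event.get("op")
    if op in ("c", "u", "r"):
        return event["after"]
    if op == "d":
        return None  # row no longer exists; a downstream sink could treat this as a delete
    # Anything else is rejected rather than guessed at
    raise ValueError(f"unexpected op: {op!r}")

if __name__ == "__main__":
    update = {
        "before": {"id": 42, "status": "NEW"},
        "after": {"id": 42, "status": "SHIPPED"},
        "op": "u",
    }
    print(unwrap_change_event(update))  # {'id': 42, 'status': 'SHIPPED'}
```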

I’m assuming that you’ve signed up for Confluent Cloud and Snowflake and are the proud owner of credentials for both. I’m going to use a demo rig based on Docker to provision SQL Server and a Kafka Connect worker, but you can use your own setup if you want.

Running Dockerised Kafka Connect worker on GCP

I talk and write about Kafka and Confluent Platform a lot, and more and more of the demos that I’m building are around Confluent Cloud. This means that I don’t have to run or manage my own Kafka brokers, Zookeeper, Schema Registry, KSQL servers, etc., which makes things a ton easier. Whilst there are managed connectors on Confluent Cloud (S3, etc.), I need to run my own Kafka Connect worker for connectors that aren’t yet provided, such as the MQTT source connector that I use in this demo. Up until now I’d either run this worker locally or manually build a cloud VM. Locally is fine, as it’s all Docker, easily spun up with a single docker-compose up -d command. But I wanted something that would keep running whilst my laptop was off, yet was as close to my local build as possible: enter GCP and its functionality to run a container on a VM automagically.

You can see the full script here. The rest of this article just walks through the how and why.

Debezium & MySQL v8 : Public Key Retrieval Is Not Allowed

I started hitting problems when trying Debezium against MySQL v8. When creating the connector:

Using Kafka Connect and Debezium with Confluent Cloud

This is based on using Confluent Cloud to provide your managed Kafka and Schema Registry. All that you run yourself is the Kafka Connect worker.

Optionally, you can use this Docker Compose to run the worker and a sample MySQL database.

Skipping bad records with the Kafka Connect JDBC sink connector

The Kafka Connect framework provides generic error handling and dead-letter queue capabilities which are available for problems with [de]serialisation and Single Message Transforms. When it comes to errors that a connector may encounter doing the actual pull or put of data from the source/target system, it’s down to the connector itself to implement logic around that. For example, the Elasticsearch sink connector provides configuration (behavior.on.malformed.documents) that can be set so that a single bad record won’t halt the pipeline. Others, such as the JDBC Sink connector, don’t provide this yet. That means that if you hit this problem, you need to unblock it yourself. One way is to manually move the offset of the consumer on past the bad message.

TL;DR: You can use kafka-consumer-groups --reset-offsets --to-offset <x> to manually move the connector past a bad message.
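The TL;DR command can be wrapped up as shown below. This sketch assumes the sink connector uses Kafka Connect's default consumer group name (`connect-` followed by the connector name) and that you know the topic, partition, and offset of the bad record. The broker address, connector name, and topic in the example are hypothetical. The target offset is the bad record's offset plus one, so the connector resumes with the next message. The consumer group must have no active members when the reset runs, so stop the connector first. Leaving off `--execute` gives a dry run of the change.

```python
import shlex

def reset_offset_command(bootstrap: str, connector: str, topic: str,
                         partition: int, bad_offset: int) -> list[str]:
    """Build a kafka-consumer-groups call that skips one bad record."""
    return [
        "kafka-consumer-groups",
        "--bootstrap-server", bootstrap,
        # Sink connectors consume as group "connect-<connector name>" by default
        "--group", f"connect-{connector}",
        "--reset-offsets",
        "--topic", f"{topic}:{partition}",
        # Resume at the record after the bad one
        "--to-offset", str(bad_offset + 1),
        "--execute",
    ]

if __name__ == "__main__":
    cmd = reset_offset_command("kafka:29092", "sink-jdbc-mysql-01", "orders", 0, 42)
    print(shlex.join(cmd))
    # kafka-consumer-groups --bootstrap-server kafka:29092 --group connect-sink-jdbc-mysql-01 --reset-offsets --topic orders:0 --to-offset 43 --execute
```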

Kafka Connect and Elasticsearch

I use the Elastic stack for a lot of my talks and demos because it complements Kafka brilliantly. A few things have changed in recent releases and this blog is a quick note on some of the errors that you might hit and how to resolve them. It was inspired by a lot of the comments and discussion here and here.

Copying data between Kafka clusters with Kafkacat

kafkacat gives you Kafka super powers 😎

I’ve written before about kafkacat and what a great tool it is for doing lots of useful things as a developer with Kafka. I also used it in a recent demo that I built, in which data needed manipulating in a way that I couldn’t easily do elsewhere. Today I want to share a very simple but powerful use for kafkacat as both a consumer and a producer: copying data from one Kafka cluster to another. In this instance it’s getting data from Confluent Cloud down to a local cluster.
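The pattern of piping kafkacat as a consumer into kafkacat as a producer can be driven from Python as shown below. This is a hedged sketch with some limitations:

- The broker addresses and topic name are placeholders.
- It copies message values only, as newline-delimited text, so values containing newlines would be split.
- It leaves out the `-X` security settings that a Confluent Cloud source would need.

The `-e` flag tells the consuming kafkacat to exit once it reaches the end of the topic.

```python
import subprocess

def kafkacat_commands(src_broker: str, dst_broker: str, topic: str):
    """Consumer/producer argv pair; -e makes the consumer exit at the end of the topic."""
    consumer = ["kafkacat", "-b", src_broker, "-C", "-t", topic, "-e"]
    producer = ["kafkacat", "-b", dst_broker, "-P", "-t", topic]
    return consumer, producer

def copy_topic(src_broker: str, dst_broker: str, topic: str) -> int:
    """Equivalent of `kafkacat -C ... | kafkacat -P ...`; returns the producer's exit code."""
    consumer_cmd, producer_cmd = kafkacat_commands(src_broker, dst_broker, topic)
    consumer = subprocess.Popen(consumer_cmd, stdout=subprocess.PIPE)
    producer = subprocess.Popen(producer_cmd, stdin=consumer.stdout)
    consumer.stdout.close()  # so the consumer sees a broken pipe if the producer exits early
    producer_rc = producer.wait()
    consumer.wait()
    return producer_rc

if __name__ == "__main__":
    copy_topic("source-cluster:9092", "localhost:9092", "pageviews")
```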

Kafka Summit GoldenGate bridge run/walk

Coming to Kafka Summit in San Francisco next week? Inspired by similar events at Oracle OpenWorld in past years, I’m proposing an unofficial run (or walk) across the GoldenGate bridge on the morning of Tuesday 1st October. We should be up and out and back in plenty of time to still attend the morning keynotes. Some people will run, some may prefer to walk, it’s open to everyone :)

Staying sane on the road as a Developer Advocate

I’ve been a full-time Developer Advocate for nearly 1.5 years now, and have learnt lots along the way. The stuff I’ve learnt about being an advocate I’ve written about elsewhere (here/here/here); today I want to write about something that’s just as important: staying sane and looking after yourself whilst on the road. This is also tangentially related to another of my favourite posts that I’ve written: Travelling for Work, with Kids at Home.

Where I’ll be on the road for the remainder of 2019

I’ve had a relaxing couple of weeks off work over the summer, and came back today to realise that I’ve got a fair bit of conference and meetup travel to wrap my head around for the next few months :)

If you’re interested in where I’ll be and want to come and say hi, hear about Kafka—or just grab a coffee or beer, herewith my itinerary as it currently stands.

Reset Kafka Connect Source Connector Offsets

Kafka Connect in distributed mode uses Kafka itself to persist the offsets of any source connectors. This is a great way to do things, as it means that you can easily add more workers, rebuild existing ones, etc., without having to worry about where the state is persisted. I personally always recommend using distributed mode, even if just for a single worker instance - it just makes things easier, and more standard. Watch my talk online here to understand more about this. If you want to reset the offset of a source connector, you can do so by very carefully modifying the data in the Kafka topic itself.
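In distributed mode, each record in the offsets topic has a key that is a JSON array of the connector name and the source partition, and a value holding the offset. Writing a tombstone (the same key with a null value) removes that stored offset. As a rough sketch of the selection step only, the hypothetical helper below takes records already read from the offsets topic and works out which tombstones to write for one connector. Two details matter here:

- It reuses the original key bytes, because the tombstone key must match byte-for-byte.
- It keeps each key's original partition, because a non-Java producer such as kafkacat may use a different default partitioner.

Actually producing the records, with the connector not running, is left out. The sample keys and values are invented.

```python
import json
from typing import Iterable, Optional, Tuple

Record = Tuple[int, bytes, Optional[bytes]]  # (partition, key, value)

def tombstones_for_connector(records: Iterable[Record], connector: str) -> list[Record]:
    """Return one (partition, key, None) tombstone per live offset of `connector`.

    `records` should be in topic order so later writes override earlier ones.
    """
    live = {}  # key bytes -> partition of the latest non-null record
    for partition, key, value in records:
        name, _source_partition = json.loads(key)
        if name != connector:
            continue
        if value is None:
            live.pop(key, None)  # offset already deleted by an earlier tombstone
        else:
            live[key] = partition
    return [(partition, key, None) for key, partition in live.items()]

if __name__ == "__main__":
    offsets_topic = [
        (0, b'["source-a",{"table":"orders"}]', b'{"offset":10}'),
        (1, b'["source-b",{"table":"users"}]', b'{"offset":7}'),
        (0, b'["source-a",{"table":"orders"}]', b'{"offset":25}'),
    ]
    for tombstone in tombstones_for_connector(offsets_topic, "source-a"):
        print(tombstone)  # (0, b'["source-a",{"table":"orders"}]', None)
```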