A short series of notes for myself as I learn more about the AI ecosystem as of Autumn [Fall] 2025. The driver for all this is understanding more about Apache Flink’s Flink Agents project, and Confluent’s Streaming Agents.

I started off this series—somewhat randomly, with hindsight—looking at Model Context Protocol (MCP). It’s a helper technology to make things easier to use and provide a richer experience. Next I tried to wrap my head around Models—mostly LLMs, but also with an addendum discussing other types of model too. Along the lines of MCP, Retrieval Augmented Generation (RAG) is another helper technology that on its own doesn’t do anything but combined with an LLM gives it added smarts. I took a brief moment in part 4 to try and build a clearer understanding of the difference between ML and AI.

So whilst RAG and MCP combined make for a bunch of nice capabilities beyond models such as LLMs alone, what I’m really circling around here is what we can do when we combine all these things: Agents! But…what is an Agent, both conceptually and in practice? Let’s try and figure it out.

Start with the obvious: What is an Agent?

Turns out this isn’t so straightforward a question to answer. Below are various definitions and discussions, around which some form of concept starts to coagulate.

Let’s begin with Wikipedia’s definition:

In computer science, a software agent is a computer program that acts for a user or another program in a relationship of agency.

We can get more specialised if we look at Wikipedia’s entry for an Intelligent Agent:

In artificial intelligence, an intelligent agent is an entity that perceives its environment, takes actions autonomously to achieve goals, and may improve its performance through machine learning or by acquiring knowledge.

Citing Wikipedia is perhaps the laziest ever blog author’s trick, but I offer no apologies 😜. Behind all the noise and fuss, this is what we’re talking about: a bit of software that’s going to go and do something for you (or your company) autonomously.

LangChain have their own definition of an Agent, explicitly identifying the use of an LLM:

An AI agent is a system that uses an LLM to decide the control flow of an application.

The blog post from LangChain as a whole gives more useful grounding in this area and is worth a read. In fact, if you want to really get into it, the LangChain Academy is free and the Introduction to LangGraph course gives a really good primer on Agents and more.

Meanwhile, the Anthropic team have a chat about their definition of an Agent. In a blog post Anthropic differentiates between Workflows (that use LLMs) and Agents:

Workflows are systems where LLMs and tools are orchestrated through predefined code paths. Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.

Independent researcher Simon Willison also uses the LLM word in his definition:

An LLM agent runs tools in a loop to achieve a goal.

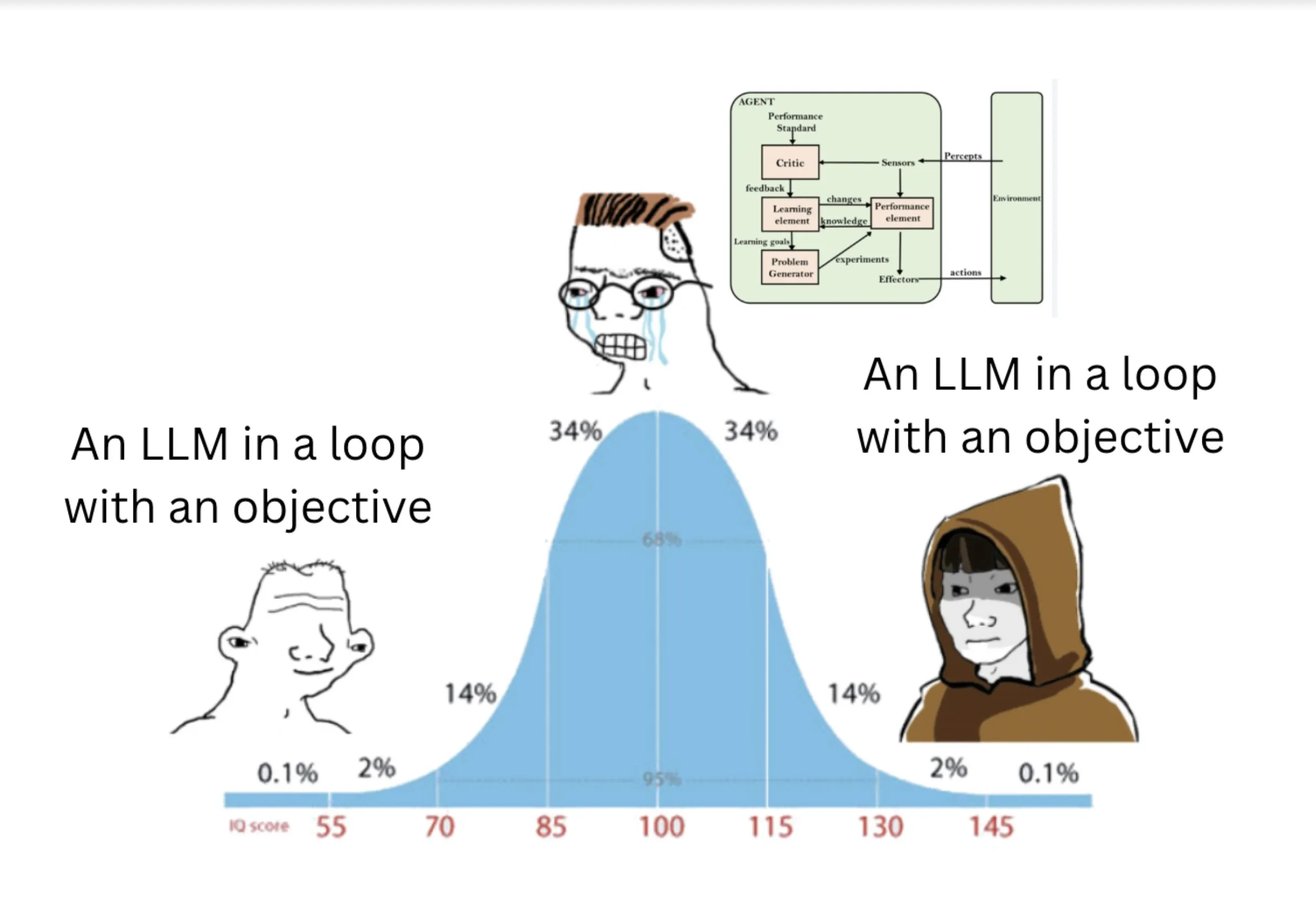

He explores the definition in a recent blog post: I think “agent” may finally have a widely enough agreed upon definition to be useful jargon now, in which Josh Bickett’s meme demonstrates how much of a journey this definition has been on:

That there’s still discussion and ambiguity nearly two years after this meme was created is telling.

My colleague Sean Falconer knows a lot more about this than I do. He was a guest on a recent podcast episode in which he spells things out:

[Agentic AI] involves AI systems that can reason, dynamically choose tasks, gather information, and perform actions as a more complete software system. [1]

[Agents] are software that can dynamically decide its own control flow: choosing tasks, workflows, and gathering context as needed. Realistically, current enterprise agents have limited agency[…]. They’re mostly workflow automations rather than fully autonomous systems. [2]

In many ways […] an agent [is] just a microservice. [3]

Okay okay…but what is an [AI] Agent?

A straightforward software Agent might do something like:

Order more biscuits when there are only two left

The pseudo-code looks like this:

10 BISCUITS = FN_CHECK_BISCUIT_LEVEL()

20 IF BISCUITS <= 2 THEN CALL ORDER_MORE_BISCUITS

30 GOTO 10

We take this code, stick it on a server and leave it to run. One happy Agent, done.

An AI Agent could look more like this:

10 BISCUITS = FN_CHECK_BISCUIT_LEVEL()

20 IF BISCUITS <= 2 THEN

REM (Here's the clever AI stuff 👇)

Look at what biscuits are in stock at the supplier

Work out who is in the office next week

Based on what you know about staff biscuit preferences, choose the best ones that are in stock

Place a biscuit order

30 GOTO 10

Other examples of AI Agents include:

- Coding Agents. Everyone’s favourite tool (when used right). They can reason about code, write code, and review PRs.

  One of the trends that I’ve noticed recently (October 2025) is the use of Agents to help with some of the up-front jobs in software engineering (such as data modelling and writing tests), rather than writing full-blown code that’s going to ship to production. That’s not to say that coding Agents aren’t being used for that, but using AI to accelerate certain tasks whilst retaining human oversight (a.k.a. HITL) makes it easier to review the output rather than just trusting to luck that reams and reams of code are correct.

  There’s a good talk from Uber on how they’re using AI in the development process, including code conversion and testing.

- Travel booking. Perhaps you tell it when you want to go, the kind of vacation you like, and what your budget is; it then goes and finds where it’s nice at that time of year, figures out travel plans within your budget, and either proposes an itinerary or even books it for you. Another variation could be you tell it where, and then it integrates with your calendar to figure out the when.

  This is a canonical example that is oft-cited; I’d be interested if anyone can point me to an actual implementation of it, even if just a toy one.

I saw this in a blog post from Simon Willison that made me wince, but am leaving the above in anyway just to serve as an example of the confusion/hype that exists in this space:

Agentic AI?

Agentic comes from Agent plus ic, the latter meaning of, relating to, or characterised by.

So Agentic AI is simply AI that is characterised by an Agent, or Agency.

Contrast that to AI that’s you sat at the ChatGPT prompt asking it to draw pictures of a duck dressed as a clown.

Nothing Agentic about that—just a human-led and human-driven interaction.

"AI Agents" becomes a bit of a mouthful with the qualifier, so much of the current industry noise is simply around "Agents". That said, "Agentic AI" sounds cool, so gets used as the marketing term in place of "AI" alone.

Building an Agent

So we’ve muddled our way through to some kind of understanding of what an Agent is, and what we mean by Agentic AI.

But how do we actually build one?

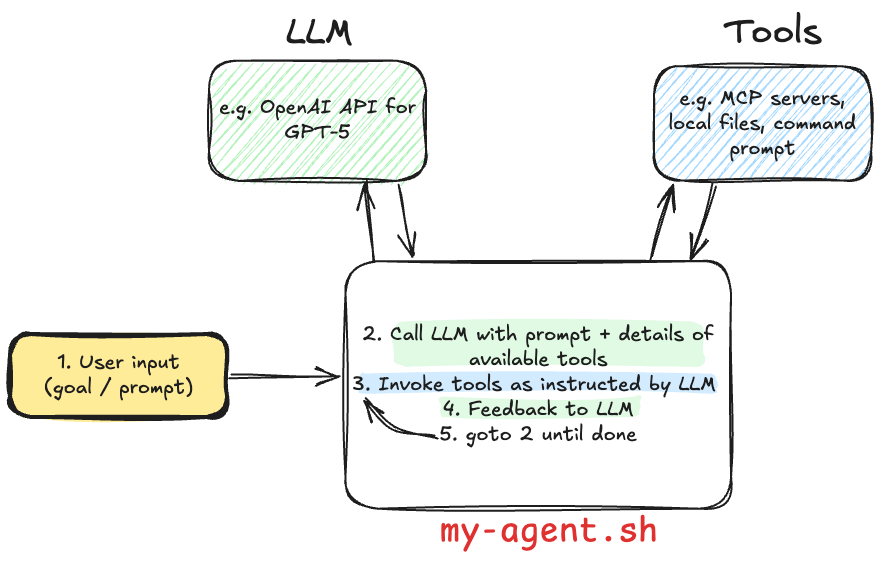

All we need is an LLM (such as access to the API for OpenAI or Claude), something to call that API (there are worse choices than curl!), and a way to call external services (e.g. MCP servers) if the LLM determines that it needs to use them.

So in theory we could build an Agent with some lines of bash, some API calls, and a bunch of sticky-backed plastic.

This is a grossly oversimplified example (and is missing elements such as memory)—but it hopefully illustrates what we’re building at the core of an Agent. On top of this goes all the general software engineering requirements of any system that gets built (suitable programming language and framework, error handling, LLM output validation, guard rails, observability, tests, etc etc).
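To make the "LLM plus tools in a loop" idea a bit more concrete, here's a minimal sketch in Python. Everything in it is made up for illustration: `fake_llm` is a scripted stand-in for a real LLM API call, the reply format (`{"tool": ...}` or `{"answer": ...}`) is my own assumption rather than any vendor's, and the two biscuit tools are hypothetical stubs where a real Agent might call MCP servers.

```python
# Hypothetical tools; a real Agent might reach these via MCP or an HTTP API.
def check_biscuit_level() -> int:
    return 1


def order_biscuits(kind: str, quantity: int) -> str:
    return f"ordered {quantity} x {kind}"


TOOLS = {
    "check_biscuit_level": check_biscuit_level,
    "order_biscuits": order_biscuits,
}


def fake_llm(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM API call (assumed reply format:
    either {"tool": name, "args": {...}} or {"answer": text})."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "check_biscuit_level", "args": {}}
    if len(tool_results) == 1:
        return {"tool": "order_biscuits",
                "args": {"kind": "digestives", "quantity": 3}}
    return {"answer": f"Done: {tool_results[-1]['content']}"}


def run_agent(goal: str, llm=fake_llm, max_steps: int = 5) -> str:
    """Ask the LLM what to do, run the tool it picks, feed the result back."""
    messages = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        reply = llm(messages)
        if "answer" in reply:
            # The LLM has decided that the goal is met.
            return reply["answer"]
        result = TOOLS[reply["tool"]](**reply["args"])
        messages.append(
            {"role": "tool", "name": reply["tool"], "content": str(result)}
        )
    # Guard so that a confused model can't loop forever.
    return "Gave up: step limit reached"


if __name__ == "__main__":
    print(run_agent("Keep the office stocked with biscuits"))
```

Swapping `fake_llm` for a function that calls a real model is where the actual work (and the error handling, validation, and so on) would come in.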

The other nuance that I’ve noticed is that whilst the above simplistic diagram is 100% driven by an LLM (it decides what tools to call, it decides when to iterate) there are plenty of cases where an Agent is to some degree rules-driven.

So perhaps the LLM does some of the autonomous work, but then there’s a bunch of good ol' IF…ELSE… statements in there too.

This is also borne out by the notion of "Workflows" when people talk about Agents.

An Agent doesn’t wake up in the morning and set out on its day serving only to fulfill its own goals and enrichment.

More often than not an Agent is going to be tightly bound into a pre-defined path with a limited range of autonomy.
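As a rough sketch of that mix, here's the biscuit example again in Python, with plain rules deciding when to act and a pluggable "LLM" step deciding what to order. The names are all hypothetical, and `stub_llm_choose` is just a vote count standing in for whatever a real model would do with the same inputs.

```python
from collections import Counter

REORDER_THRESHOLD = 2  # rule: reorder when this many or fewer are left


def stub_llm_choose(in_stock: list[str], preferences: dict[str, str]) -> str:
    """Stand-in for the LLM step: pick the in-stock biscuit most people like."""
    votes = Counter(p for p in preferences.values() if p in in_stock)
    if votes:
        return votes.most_common(1)[0][0]
    return in_stock[0]


def biscuit_workflow(level, in_stock, preferences, choose=stub_llm_choose):
    """Return the biscuit to order, or None if no order should be placed."""
    # Rules-driven: good ol' IF...ELSE, no model involved.
    if level > REORDER_THRESHOLD or not in_stock:
        return None
    # LLM-driven: the only step with any autonomy.
    choice = choose(in_stock, preferences)
    # Rules-driven again: don't trust the model's output blindly.
    if choice not in in_stock:
        choice = in_stock[0]
    return choice


if __name__ == "__main__":
    prefs = {"ana": "hobnobs", "raj": "hobnobs", "li": "jaffa cakes"}
    print(biscuit_workflow(1, ["digestives", "hobnobs"], prefs))
```

The autonomy here is deliberately narrow: the model gets to pick a biscuit, and nothing else.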

What if you want to actually build this kind of thing for real? That’s where tools like LangGraph and LangChain come in. Here’s a notebook with an example of an actual Agent built with these tools. LlamaIndex is another framework, with details of building an Agent in their docs.

Other components of an Agent

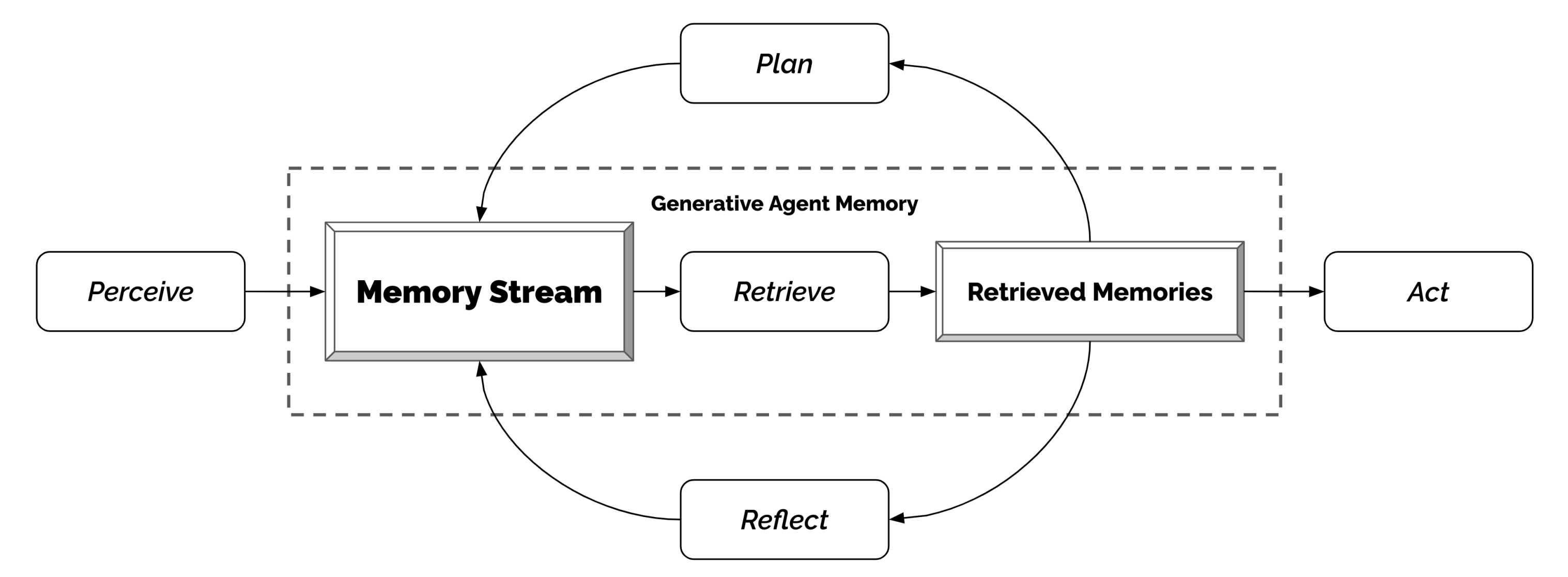

As we build up from the so-simple-it-is-laughable strawman example of an Agent above, one of the features we’ll soon encounter is the concept of memory.

The difference between a crappy response and a holy-shit-that’s-magic response from an LLM is often down to context. The richer the context, the better its chance of generating an accurate output.

So if an Agent can look back on what it did previously, determining what worked well and what didn’t, perhaps even taking into account human feedback, it can then generate a more successful response the next time.
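A very rough sketch of what that could look like in Python: keep a record of what was tried and how it went, and fold the most recent entries into the next prompt. The `AgentMemory` class and `build_prompt` function are names I've invented for illustration; real frameworks have far richer notions of memory (summarisation, retrieval, and so on).

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical memory store: a list of past actions and outcomes."""
    entries: list[dict] = field(default_factory=list)

    def record(self, action: str, outcome: str, feedback: str = "") -> None:
        self.entries.append(
            {"action": action, "outcome": outcome, "feedback": feedback}
        )

    def as_context(self, limit: int = 3) -> str:
        """Render the most recent entries as text for the prompt."""
        lines = []
        for entry in self.entries[-limit:]:
            line = f"- {entry['action']} -> {entry['outcome']}"
            if entry["feedback"]:
                line += f" (feedback: {entry['feedback']})"
            lines.append(line)
        return "\n".join(lines)


def build_prompt(goal: str, memory: AgentMemory) -> str:
    """Combine the goal with what the Agent remembers about earlier attempts."""
    history = memory.as_context()
    if not history:
        return f"Goal: {goal}"
    return f"Goal: {goal}\nWhat happened previously:\n{history}"


if __name__ == "__main__":
    memory = AgentMemory()
    memory.record("ordered custard creams", "half left uneaten", "too sweet")
    print(build_prompt("Order biscuits for next week", memory))
```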

You can read a lot more about memory in this chapter of Agentic Design Patterns by Antonio Gulli. This blog post from "The BIG DATA guy" is also useful: Agentic AI, Agent Memory, & Context Engineering

This diagram from Generative Agents: Interactive Simulacra of Human Behavior (J.S. Park, J.C. O’Brien, C.J. Cai, M.R. Morris, P. Liang, M.S. Bernstein) gives a good overview of a much richer definition of an Agent’s implementation. The additional concepts include memory (discussed briefly above), planning, and reflection:

Also check out Paul Iusztin’s talk from QCon London 2025 on The Data Backbone of LLM Systems. Around the 35-minute mark he goes into some depth around Agent architectures.

Other Agent terminology

Multi-Agent System (MAS)

Just as you can build computer systems as monoliths (everything done in one place) or microservices (multiple programs, each responsible for a discrete operation or domain), you can also have one big Agent trying to do everything (probably not such a good idea) or individual Agents each good at their particular thing that are then hooked together into what’s known as a Multi-Agent System (MAS).

Sean Falconer’s family meal planning demo is a good example of a MAS. One Agent plans the kids' meals, one the adults' meals, another combines the two into a single plan, and so on.

Human in the Loop (HITL)

This is a term you’ll come across referring to the fact that Agents might be pretty good, but they’re not infallible. In the travel booking example above, do we really trust the Agent to book the best holiday for us? Almost certainly we’d want—at a minimum—the option to sign off on the booking before it goes ahead and sinks £10k on an all-inclusive trip to Bognor Regis.

Then again, we’re probably happy enough for an Agent to access our calendars without asking permission. Whether it needs permission to create a meeting is up to us and how much we trust it.

When it comes to coding, having an Agent write code, test it, fix the broken tests, compare it to a spec, and iterate is really neat.

On the other hand, letting it decide to run rm -rf /…less so 😅.

Every time an Agent requires HITL, it reduces its autonomy and/or responsiveness to situations. As well as simply using smarter models that make fewer mistakes, there are other things that an Agent can do to reduce the need for HITL such as using guardrails to define acceptable parameters. For example, an Agent is allowed to book travel but only up to a defined threshold. That way the user gets to trade off convenience (no HITL) with risk (unintended first-class flight to Hawaii).
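Here's a minimal sketch of that threshold guardrail in Python. The `Booking` class, the `auto_approve_limit` parameter, and the `ask_human` callback are all hypothetical names; the point is just that anything under the limit goes through on its own, and anything over it waits for a human.

```python
from dataclasses import dataclass


@dataclass
class Booking:
    description: str
    cost: float


def needs_human_approval(booking: Booking, auto_approve_limit: float) -> bool:
    """Guardrail: anything above the limit needs a human to sign it off."""
    return booking.cost > auto_approve_limit


def book_travel(booking: Booking, auto_approve_limit: float = 500.0,
                ask_human=None) -> str:
    """Book automatically under the limit, otherwise defer to a human.

    ask_human is a hypothetical callback that returns True to approve.
    With no human available, over-limit bookings are rejected.
    """
    if needs_human_approval(booking, auto_approve_limit):
        if ask_human is None or not ask_human(booking):
            return "rejected"
        return "booked (human approved)"
    return "booked (auto-approved)"


if __name__ == "__main__":
    print(book_travel(Booking("Train to Leeds", 80.0)))
    print(book_travel(Booking("First-class flight to Hawaii", 9000.0)))
```

Raising `auto_approve_limit` buys convenience at the cost of risk, which is exactly the trade-off described above.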

Further reading

- 📃 Generative Agents: Interactive Simulacra of Human Behavior

- 🎥 Paul Iusztin - The Data Backbone of LLM Systems - QCon London 2025

- 📖 Antonio Gulli - Agentic Design Patterns

- 📖 Sean Falconer - https://seanfalconer.medium.com/

But…this guy is talking nonsense!

The purpose of this blog series is for me to take notes as I try to build my understanding of this space. If I’ve got anything wrong, or am missing some important nuances—please let me know in the comments below 😁 👇

Update: It turns out I was talking nonsense!

Nonsense may be putting it too strongly. But certainly, there was a detail in my understanding of agents that I was weighing wrong in my thinking about them. See what you think: I’ve been thinking about Agents and MCP all wrong.